AI tools have become our trusted companions—helping us write, design, and even make decisions. But have you ever stopped to wonder: Are these tools truly unbiased?

The truth is, AI is only as good as the data it’s trained on, and that data comes from humans—flawed, complex, and often biased humans. From subtle stereotypes to glaring inaccuracies, bias in AI tools can influence everything from the ads you see to the recommendations you get.

So, how do you spot these hidden biases? And, more importantly, what can you do about them? Let’s dive in.

What Does AI Bias Look Like?

Bias in AI doesn’t always announce itself—it often sneaks in through the cracks. Here are a few ways it can manifest:

- Stereotypical Outputs:
  - Ask an AI to “draw a doctor,” and it might default to a male figure. Ask for a “teacher,” and it might show a woman.
  - These patterns reflect societal biases embedded in the data AI learns from.

- Skewed Search Results:
  - Search for “successful entrepreneurs” and see how often certain demographics are overrepresented.
  - AI isn’t intentionally biased; it amplifies the trends in its training data.

- Language Biases:
  - AI might associate certain adjectives (e.g., “strong” or “beautiful”) disproportionately with one gender or ethnicity.
  - This subtle reinforcement can perpetuate stereotypes without us even realizing it.
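
To make the language-bias idea concrete, here is a minimal sketch that counts how often an adjective appears in the same sentence as male or female terms. The word lists and sample sentences are made up for illustration, and sharing a sentence is only a rough proxy for association, so treat the numbers as a prompt for closer reading rather than proof of bias.

```python
import re
from collections import Counter

# Hypothetical word lists, chosen only for illustration.
GENDERED = {
    "he": "male", "him": "male", "his": "male", "man": "male",
    "she": "female", "her": "female", "hers": "female", "woman": "female",
}
ADJECTIVES = {"strong", "beautiful"}

def adjective_gender_counts(sentences):
    """Count how often each adjective shares a sentence with male or female terms."""
    counts = {adj: Counter() for adj in ADJECTIVES}
    for sentence in sentences:
        words = set(re.findall(r"[a-z']+", sentence.lower()))
        genders = {GENDERED[w] for w in words if w in GENDERED}
        for adj in ADJECTIVES & words:
            for gender in genders:
                counts[adj][gender] += 1
    return counts

# Made-up sentences standing in for text collected from an AI tool.
sample_outputs = [
    "He is a strong leader.",
    "She is a beautiful dancer.",
    "The man was strong and calm.",
    "She is strong and determined.",
]

counts = adjective_gender_counts(sample_outputs)
for adj in sorted(counts):
    print(adj, dict(counts[adj]))
```

On a real tool you would want many more outputs than this before reading anything into the tallies.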

Why Does AI Bias Exist?

AI doesn’t have opinions—it learns from patterns in data. The problem? That data is created by humans, who are anything but neutral.

- Historical Biases:
  - Training data often reflects historical inequalities, such as underrepresentation of certain groups in media or academia.

- Imbalanced Datasets:
  - If an AI system is trained on predominantly Western, English-language sources, it might struggle with cultural nuances or other perspectives.

- Design Choices:
  - Bias can also stem from the goals and assumptions of the developers themselves. If diversity isn’t a priority during development, it is unlikely to be reflected in the final product.
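
If you have access to metadata about a dataset, a quick representation check can show how lopsided it is. The sketch below assumes each record carries a `language` field; that field name, the `label_shares` helper, and the tiny corpus are all hypothetical.

```python
from collections import Counter

def label_shares(records, key):
    """Return each value's share of the records for the given metadata key."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {value: count / total for value, count in counts.items()}

# Made-up metadata for a tiny corpus, for illustration only.
corpus = [
    {"language": "en"}, {"language": "en"}, {"language": "en"},
    {"language": "en"}, {"language": "en"}, {"language": "en"},
    {"language": "es"}, {"language": "hi"},
]

shares = label_shares(corpus, "language")
for language, share in sorted(shares.items()):
    print(f"{language}: {share:.1%}")
```

A skewed share does not prove the resulting model is biased, but it is a reasonable first place to look.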

The Real-World Impact of AI Bias

AI bias isn’t just a theoretical problem—it has real consequences.

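- Hiring Algorithms: AI tools trained on biased data have been found to favor male applicants over female ones. Amazon, for example, scrapped an experimental résumé-screening tool after it learned from years of mostly male applications to downgrade résumés that mentioned the word “women’s,” as Reuters reported in 2018.

- Facial Recognition: Early commercial systems were significantly less accurate for people with darker skin tones. The 2018 Gender Shades study, for example, found the highest error rates for darker-skinned women, which raised concerns about fairness and safety.

- Misinformation: Bias can skew how AI-generated content presents information, reinforcing harmful stereotypes or spreading inaccuracies.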
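
One simple way to put a number on the hiring example is to compare selection rates across groups. The sketch below uses made-up screening decisions, and the function names are hypothetical. A ratio well below 1.0 is a signal worth investigating; US employment guidelines use 0.8 (the “four-fifths rule”) as a rule of thumb, not as a definitive test.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the fraction of positive decisions per group.

    `decisions` is a list of (group, selected) pairs, where `selected` is a bool.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Made-up screening decisions, for illustration only.
decisions = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)

rates = selection_rates(decisions)
ratio = impact_ratio(rates)
print(rates, round(ratio, 2))
```

The same per-group comparison works for accuracy: swap “was selected” for “was classified correctly” and compare the rates.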

How to Spot Bias in AI Tools

The first step to addressing bias is learning how to identify it. Here’s how:

- Ask Questions:
  - What assumptions does the AI seem to be making?
  - Are certain groups underrepresented or stereotyped?

- Test with Diverse Inputs:
  - Try prompts that reflect a range of perspectives (e.g., “Write about a family from [different cultures or backgrounds]”).
  - See how well the AI adapts to requests outside its “comfort zone.”

- Compare Results:
  - Use multiple AI tools for the same task and analyze their outputs.
  - Differences between tools can hint at differences in their training data or design, though a single comparison rarely proves where a bias comes from.
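
The “test with diverse inputs” and “compare results” steps can be sketched as a small script: fill one prompt template with different roles, send each prompt to the tool, and tally the pronouns that come back. Everything here is an assumption for illustration. `fake_generate` is a stand-in with canned answers, not a real API, and you would replace it with a call to whichever tool you are testing.

```python
import re
from collections import Counter

def fake_generate(prompt):
    """Stand-in for a real AI tool; replace with a call to the tool under test."""
    canned = {
        "doctor": "He reviewed the chart before he spoke to the patient.",
        "teacher": "She greeted the class and handed out her notes.",
        "engineer": "They checked the design twice.",
    }
    for role, text in canned.items():
        if role in prompt:
            return text
    return ""

# Hypothetical pronoun categories used for the tally.
PRONOUNS = {
    "he": "male", "him": "male", "his": "male",
    "she": "female", "her": "female", "hers": "female",
    "they": "neutral", "them": "neutral", "their": "neutral",
}

def pronoun_profile(generate, template, roles):
    """Fill the template with each role and tally pronoun categories in the output."""
    profile = {}
    for role in roles:
        text = generate(template.format(role=role))
        words = re.findall(r"[a-z]+", text.lower())
        profile[role] = Counter(PRONOUNS[w] for w in words if w in PRONOUNS)
    return profile

profile = pronoun_profile(
    fake_generate,
    "Write one sentence about a {role}.",
    ["doctor", "teacher", "engineer"],
)
for role, tally in profile.items():
    print(role, dict(tally))
```

To compare tools, pass a different `generate` function for each one and look at the profiles side by side. Run each prompt several times, since a single response can be a fluke.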

How to Mitigate Bias

Spotting bias is important, but what can you do about it?

- Be an Active Critic:
  - Don’t take AI-generated content at face value. Always question its accuracy and fairness.

- Diversify Your Tools:
  - Don’t rely on a single tool, and favor AI tools developed by teams with a strong commitment to diversity and inclusion.

- Give Feedback:
  - Many AI developers welcome user input. If you spot bias, report it. Your report helps improve the system for everyone.

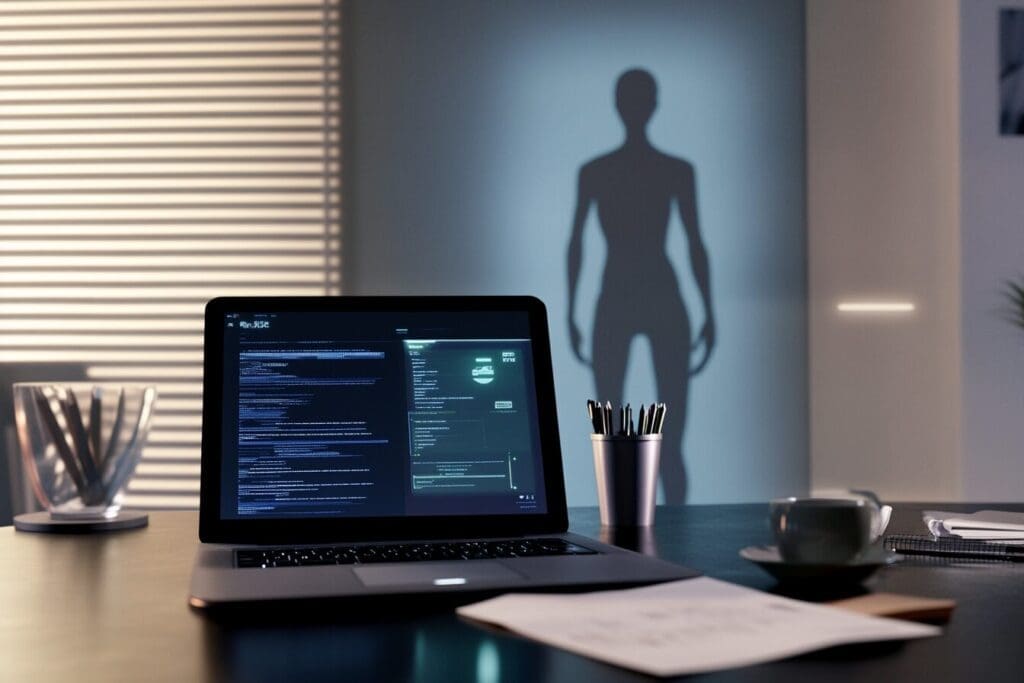

Humans Are the Key

Here’s the bottom line: AI isn’t inherently biased—it reflects the flaws in its design and training. That’s why human oversight is crucial. We need to remain vigilant, questioning the outputs we see and pushing for better, fairer tools.

AI has the potential to be a powerful force for good, but only if we hold it accountable. The responsibility doesn’t just lie with developers—it’s up to all of us to demand transparency, diversity, and fairness in the systems we use.

Your Turn

Have you ever noticed bias in an AI tool you’ve used? How did it affect your experience? Share your thoughts and examples in the comments—we’d love to hear your perspective!